Confronting NVLink? Technology giants such as Intel, AMD, Microsoft, and Broadcom form UALink

Eight tech giants, including Intel, Google, Microsoft, and Meta, are forming a new industry group, the UALink Promoter Group, to guide the development of the components that link AI accelerator chips together in data centers.

The UALink Promoter Group, announced on Thursday, also counts AMD, Hewlett Packard Enterprise, Broadcom, and Cisco among its members. The group is proposing a new industry standard for connecting the ever-growing number of AI accelerator chips within servers. Broadly defined, AI accelerators are chips, ranging from GPUs to custom-designed solutions, that speed up the training, fine-tuning, and running of AI models.

"The industry needs an open standard that can be moved forward very quickly, in an open format that allows multiple companies to add value to the overall ecosystem," Forrest Norrod, general manager of AMD's data center solutions business, told reporters in a briefing on Wednesday. "The industry needs a standard that allows innovation to proceed at a rapid pace, unconstrained by any single company."

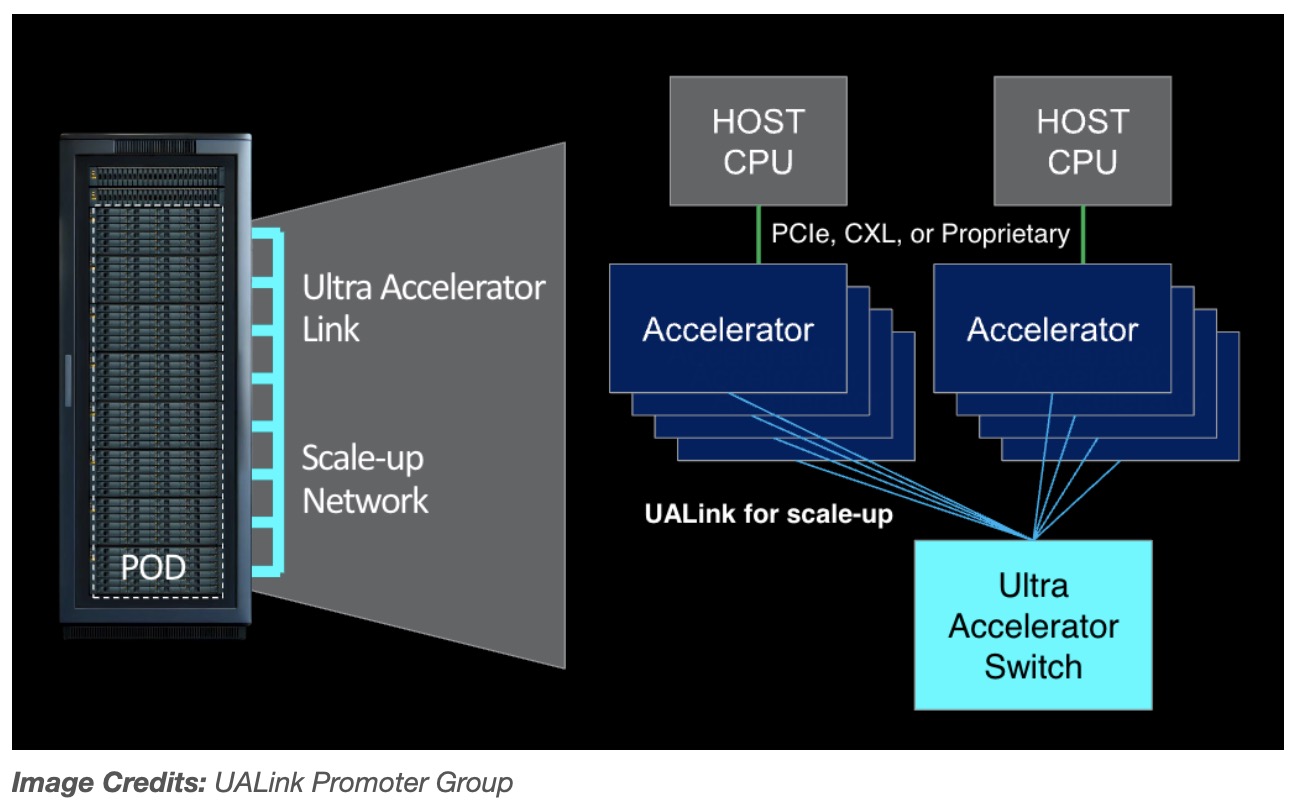

The first version of the proposed standard, UALink 1.0, will connect up to 1,024 AI accelerators (GPUs only) in a single computing "pod." (The group defines a pod as one or several racks in a server.) UALink 1.0, which builds on "open standards" including AMD's Infinity Fabric, will allow direct loads and stores between the memory attached to AI accelerators, and will generally improve speed and lower data transfer latency compared with existing interconnect specifications.

The group said it will create a consortium, the UALink Consortium, in the third quarter to oversee future development of the UALink specification. UALink 1.0 will be made available around the same time to companies that join the consortium, and a higher-bandwidth update, UALink 1.1, is scheduled to arrive in the fourth quarter of 2024.

The first batch of UALink products will be launched "in the coming years," Norrod said.

Conspicuously absent from the group's member list is Nvidia, the largest maker of AI accelerators, with an estimated 80% to 95% of the market. Nvidia declined to comment. But it is not hard to see why the chipmaker is not eager to back UALink.

For one, Nvidia offers its own proprietary interconnect technology for linking GPUs within its data center servers. The company is probably not keen to support a specification based on rival technologies.

Then there is the fact that Nvidia operates from a position of enormous strength and influence.

In Nvidia's most recent fiscal quarter (Q1 of fiscal 2025), data center sales, which include its AI chip sales, rose more than 400% year over year. If Nvidia stays on its current trajectory, it is set to overtake Apple as the world's second-most valuable company sometime this year.

Simply put, if Nvidia does not want to participate, it does not have to.

As for Amazon Web Services (AWS), the only public cloud giant not contributing to UALink, it may be in "wait-and-see" mode as it continues to work on its various in-house accelerator hardware efforts. It could also be that AWS, with its firm grip on the cloud services market, sees little strategic point in opposing Nvidia, which supplies many of the GPUs it offers to customers.

AWS did not respond to TechCrunch's request for comment.

Indeed, the biggest beneficiaries of UALink, besides AMD and Intel, appear to be Microsoft, Meta, and Google, which have spent billions of dollars on Nvidia GPUs to power their clouds and train their ever-growing AI models. All of them are looking to wean themselves off a vendor they see as overly dominant in the AI hardware ecosystem.

Google has custom chips, TPUs and Axion, for training and running AI models. Amazon has several AI chip families. Microsoft entered the race last year with Maia and Cobalt. And Meta is refining its own lineup of accelerators.

Meanwhile, Microsoft and its close partner OpenAI reportedly plan to spend at least $100 billion on a supercomputer for training AI models, which will be outfitted with future versions of the Cobalt and Maia chips. Those chips will need something to link them together, and perhaps it will be UALink.